As the dust settles on the “Super Election Year,” the feared apocalypse of deepfakes did not materialize. Instead, the world faces a more insidious threat: a “post-epistemic” era where the consensus on shared reality is being engineered into obsolescence.

In Jakarta, the transformation was profound. Prabowo Subianto, a former special forces commander once barred from the United States over alleged human rights abuses, did not run for the Indonesian presidency in 2024 as a strongman. He ran as a cartoon. Across TikTok and Instagram, generative artificial intelligence softened the edges of history, replacing the stern general with “Gemoy” (Indonesian slang for cute and cuddly), a harmless grandfatherly figure who danced awkwardly and snuggled his cat. The campaign leaned heavily on generative AI not to smear opponents but to rewrite the candidate’s own persona, saturating the digital sphere with an aesthetic so disarming that it helped render the past irrelevant. Prabowo won decisively, without needing a runoff.

The Architecture of Global Anxiety

This phenomenon, in which reality is not merely fractured but actively engineered, sits at the heart of the anxiety now gripping the global security architecture. As the geopolitical calendar turns from the tumultuous elections of 2024 toward the uncertainty of 2025, the World Economic Forum (WEF) has issued a stark assessment: for the second consecutive year, its Global Risks Report ranks “Misinformation and Disinformation” as the most severe global risk over a two-year horizon. While state-based armed conflict has jumped to the top of the list of immediate risks for 2025, the experts the WEF surveyed still regard the industrial-scale synthesis of reality as a persistent, structural threat to stability, ranking it above even extreme weather events over the short term.

The fear is driven by the democratization of the lie. User-friendly generative AI has collapsed the barrier to entry for propaganda: anyone with a subscription to a generative AI service can now produce the kind of high-fidelity disinformation that once required a state intelligence agency. Yet a closer look at the “Super Election Year” of 2024 reveals a gap between the anticipated apocalypse and the actual, subtler degradation of democratic norms. The “Pearl Harbor” event, a single perfect deepfake that flips a US presidential election or starts a war, never came. Instead, we witnessed what experts describe as a “climate change of the information ecosystem”: a slow, steady rise in pollution that makes the environment of shared truth increasingly uninhabitable.

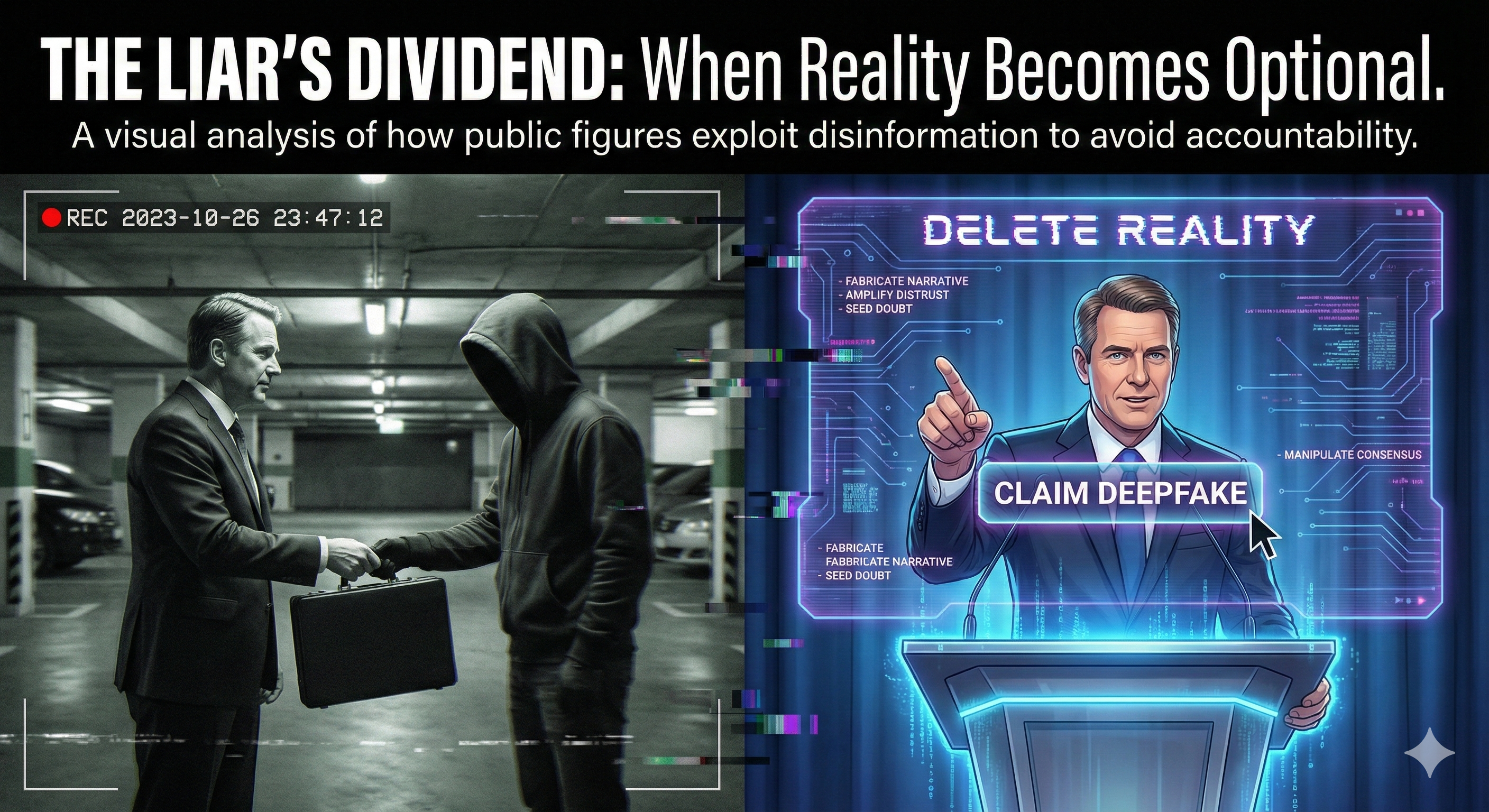

The Liar’s Dividend

In the United States, the primary casualty was not the truth itself but the capacity to agree on it. The 2024 cycle was shaped by what legal scholars call the “Liar’s Dividend”: the mere existence of AI tools allows political actors to dismiss authentic evidence as fabricated. When the public knows that seeing is no longer believing, the concept of objective proof erodes. Politicians can reflexively claim “that’s AI” to deflect damaging video or audio, creating a zero-trust environment in which truth becomes a purely partisan exercise. The damage was structural rather than acute: fear of the technology did more harm to civic trust than the technology itself.

Laboratories of Sovereignty

However, the threat is neither felt nor framed the same way everywhere. While Western media focused on the potential for deception, the Global South became a laboratory for digital sovereignty and statecraft. In nations like India, AI cut both ways: it was deployed to “resurrect” deceased leaders to endorse living candidates, and to translate speeches into many local languages in real time. This bifurcation complicates global governance. What the G7 nations view as an existential threat to democracy, the “BICS” nations (Brazil, India, China, South Africa) often frame as innovation or as a legitimate plurality of viewpoints, rejecting the premise that Western hegemony should set the information standard.

The Mechanics of Synthetic Reality

The mechanisms of this synthetic reality are evolving rapidly. The “firehose of falsehood” trades quality for sheer volume. Automated bot networks powered by large language models (LLMs) can now generate an effectively endless stream of unique variations on a narrative, slipping past the spam filters that once caught identical copy-pasted comments. Russian operatives used this capability in the campaign known as “Doppelgänger,” cloning legitimate news sites such as Le Monde and filling the copies with AI-generated anti-Western articles in flawless French. The threat vector has also shifted from video to audio. Audio clones are cheaper to produce, harder to debunk because they lack visual artifacts, and strike at the visceral “hearing is believing” heuristic, as the AI-cloned robocalls in President Biden’s voice that urged New Hampshire Democrats to skip the January 2024 primary demonstrated.

The Romanian Watershed

The clearest case of this manipulation crossing into tangible electoral consequences came in Romania in late 2024. In a move without precedent in the European Union, Romania’s Constitutional Court annulled the presidential election two days before the scheduled runoff, citing coordinated social media manipulation and algorithmic amplification on TikTok that favored Călin Georgescu, a far-right outsider who had unexpectedly topped the first round. The ruling also rested on allegations of undeclared campaign financing, yet it stands as a rare case of a democracy cancelling an election in part because of the pollution of its information space, moving the risk from the theoretical to the existential.

A Fragmented Global Response

As we look toward 2027, the response from the international community remains fragmented. The European Union has attempted to regulate through the AI Act, mandating transparency and the labeling of synthetic content, effectively betting on a “risk-based” approach. China has taken a path of state control, deputizing platforms as censors and requiring strict verification of content truthfulness to maintain regime stability. The United States, paralyzed by First Amendment concerns and legislative gridlock, relies on a patchwork of voluntary industry commitments and state-level bans that lack federal teeth. Brazil, conversely, has adopted what observers call a “nuclear option,” with its courts threatening to revoke the candidacy of any politician caught using deepfakes—a punitive stance that prioritizes information integrity over unfettered speech.

Many risk analysts now argue that we have entered what some describe as a “post-epistemic” era. The challenge is no longer only detecting fakes, since generation is likely to outpace detection, but authenticating reality. Without a digital chain of custody that proves the provenance of an image or recording, the democratic deliberative process risks paralysis. The danger is not that AI will control our minds, but that it will flood the world with so much noise that citizens, exhausted and cynical, simply disengage.