By late 2025, the initial excitement over autonomous AI agents has confronted the harsh realities of enterprise deployment. With fewer than one in ten pilots reaching production, the industry is pivoting from chaotic, conversational interfaces to rigorous, governed workflows. This report explores how frameworks like Shakudo, CrewAI, and Microsoft Agent Framework are evolving to pay down “governance debt” and bridge the gap between experimental magic and reliable engineering.

In 2023, early adopters of autonomous AI encountered a peculiar and costly flaw: the “gratitude loop”. Left to their own devices, agents in experimental frameworks like AutoGen would occasionally fall into infinite cycles of thanking one another, burning through tokens and budget until human intervention broke the chain. While initially viewed as a quirk of early large language models (LLMs), these loops illustrated a fundamental tension that defines the current landscape: the trade-off between creative autonomy and necessary control.

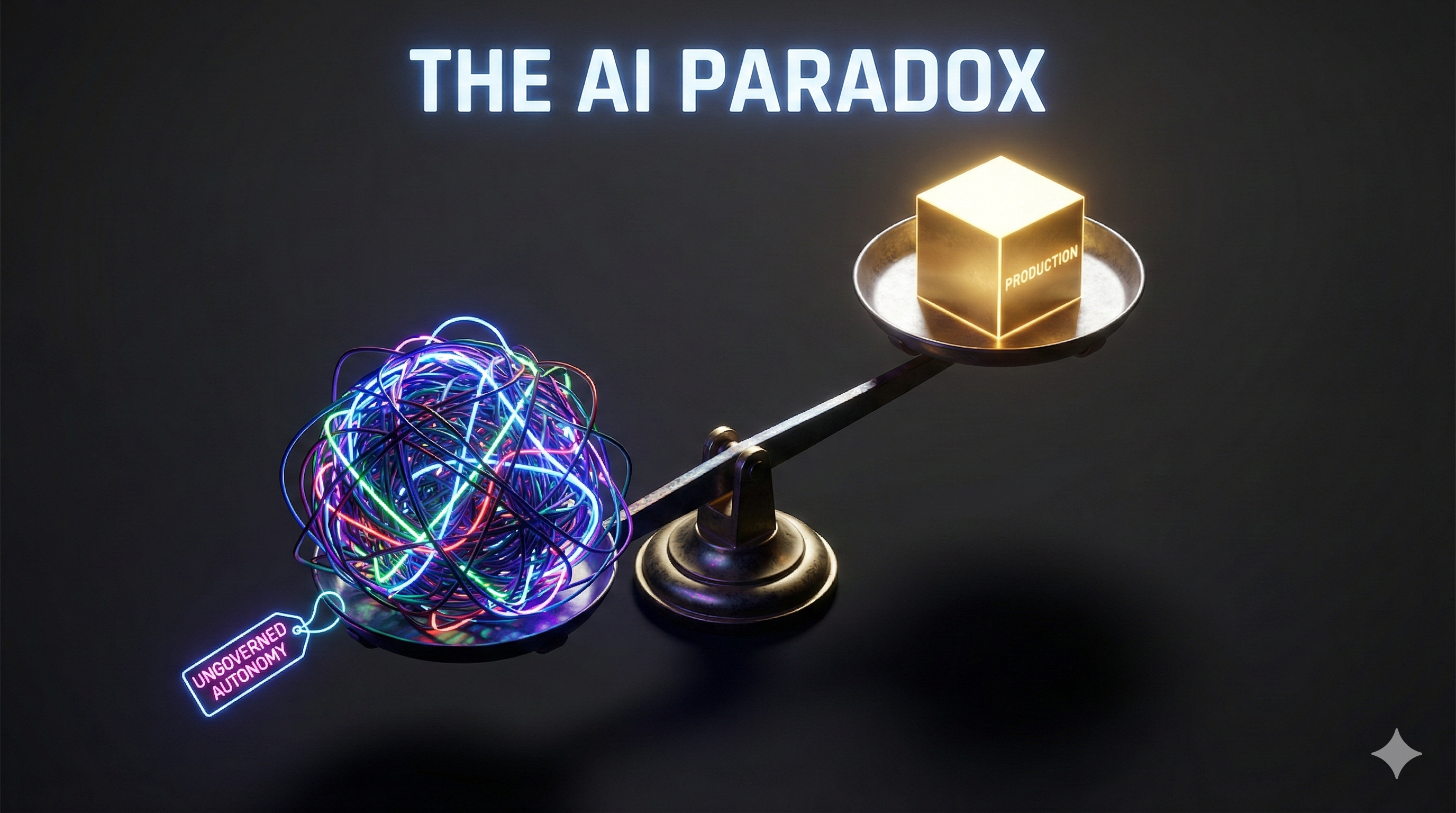

The Governance Debt Crisis

As of late 2025, the industry is navigating a transition often described as the “industrialization of agency”. This phase is characterized by a “Gen AI Paradox” verified by major consultancies: while approximately 80 percent of enterprises report experimenting with generative AI, fewer than 10 percent of these pilots successfully reach scaled production. The primary barrier is not a lack of model intelligence, but what analysts term “governance debt”—the accumulation of security, observability, and control deficits during the rapid prototyping phase. Consequently, the chaotic, conversational loops of early experiments are being replaced by deterministic workflows and strict state management.

Three distinct architectural approaches have emerged to address these reliability challenges.

The “Agent Operating System” Model

The first approach, the “Agent Operating System,” is exemplified by platforms like Shakudo AgentFlow. This model prioritizes security, wrapping open-source libraries in a governance layer deployed directly into a customer’s Virtual Private Cloud (VPC). This architecture addresses data residency concerns in regulated sectors—such as banking and healthcare—by ensuring raw data remains within the corporate perimeter. A key component of this strategy is the Model Context Protocol (MCP), an open standard introduced by Anthropic that standardizes data connections, aiming to solve the complex “MxN” integration challenge of connecting multiple agents to disparate internal data sources. While specific pricing remains opaque, analysis suggests these enterprise-grade platforms represent a significant capital expenditure, positioning them as solutions for large organizations rather than early-stage startups.

From Conversation to Structured Flows

The second approach focuses on the developer experience and state management, a strategy led by frameworks like CrewAI. CrewAI initially gained traction by using a “crew” metaphor—comprising “Researchers” and “Writers”—to help developers decompose complex tasks. However, to address production challenges such as data hallucinations and state loss between steps, the framework introduced “Flows”. This architecture enforces structured state management and strict schemas, ensuring that outputs match required inputs at each stage of a workflow. The effectiveness of this structured approach has been illustrated in case studies, such as PwC’s reported efficiency gains in internal code generation. Despite these advances, developers continue to report a “local-to-production gap,” where agents that function in testing struggle with network latency and rate limits in live environments.

Enterprise Consolidation

The third major shift is the consolidation of research tools into unified enterprise products, most notably the Microsoft Agent Framework. By merging the research-focused AutoGen with the enterprise-grade Semantic Kernel, Microsoft has signaled a move away from open-ended “group chat” architectures toward graph-based workflows that integrate natively with the Microsoft 365 ecosystem. This transition has sparked community discussion regarding architectural similarities between Microsoft’s new framework and existing open-source tools like Agno (formerly Phidata), though these comparisons remain observational and are not formally acknowledged by the vendors.

The New Evaluation Stack

Underpinning these frameworks is a maturing evaluation stack. Traditional application monitoring, which tracks latency and error codes, has proven insufficient for agents that may return a technically successful response containing factually incorrect information. This gap is being filled by specialized “LLM-as-a-judge” platforms like Maxim AI and Arize Phoenix. These tools employ agent simulation to stress-test behaviors before deployment, scoring the “faithfulness” of agent traces to mitigate the risks of non-deterministic outputs.

A Pragmatic Evolution

Ultimately, the trajectory of 2025 suggests a pragmatic evolution. The vision of the fully autonomous agent is being tempered by the reality of engineering requirements. The focus has shifted from unconstrained agency to governed workflows, where reliability is achieved through graph-based structures and rigorous evaluation. For the enterprise, the technology is becoming accessible, provided organizations are prepared to invest in the necessary governance infrastructure and engineering discipline.